Segmentation

Understanding image segmentation methods and their broad applications

What is Image Segmentation?

Image segmentation is the process of partitioning an image into coherent, meaningful regions. In essence, segmentation algorithms seek to simplify or change the representation of an image to make it more understandable and easier to analyze. Rather than considering every pixel individually, segmentation allows us to group pixels that share certain characteristics (e.g., intensity, color, texture) into larger regions that may correspond to objects, organs, or areas of interest.

Segmentation is a cornerstone of many computer vision and image processing pipelines—particularly in medical imaging, autonomous systems, and remote sensing. By isolating structures like tumors, roads, or defects, we can perform higher-level tasks such as object recognition, volumetric measurement, or anomaly detection.

Why is Segmentation Important?

Segmentation drastically reduces the complexity of subsequent image analysis steps and forms the backbone of many critical applications:

- Object Detection: Precise segmentation helps in accurately localizing and classifying objects within an image or video, which is vital for surveillance, robotics, and industrial inspection.

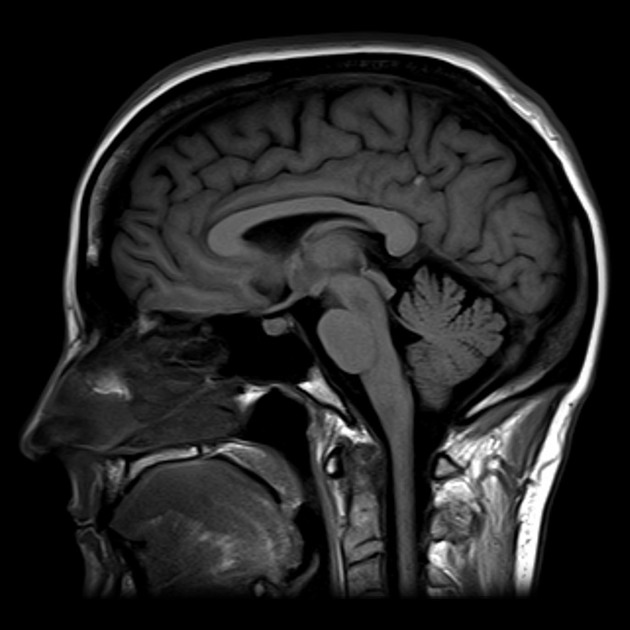

- Medical Diagnosis: By delineating structures such as organs or lesions in medical scans (CT, MRI, Ultrasound), segmentation aids in treatment planning, disease monitoring, and surgical guidance.

- Autonomous Systems: Self-driving cars, drones, and service robots rely heavily on robust segmentation to understand road layouts, detect pedestrians, and navigate safely.

- Quality Control: Automated inspection lines in manufacturing utilize segmentation to highlight defective items, chips, or irregularities.

Types of Image Segmentation Techniques

Segmentation techniques can be broadly classified according to the core principle or algorithmic approach they employ. Below are several common categories:

1. Thresholding-Based Segmentation

Thresholding is one of the simplest yet most widely used segmentation strategies. It converts an image from grayscale (or color) to a binary image, differentiating foreground (objects) from the background by setting a cut-off intensity value (the threshold).

- Global Thresholding: Uses a single threshold value for the entire image, typically determined by analyzing the overall histogram. Most effective when the foreground and background intensities are well-separated.

- Adaptive (Local) Thresholding: Computes a separate threshold for each small neighborhood of the image. Useful when illumination or contrast varies across different parts of the image.

- Otsu’s Method: An automatic approach that selects an “optimal” global threshold by minimizing the within-class variance of the two segmented groups (equivalently, maximizing the between-class variance). Often used as a robust default when no prior knowledge of the threshold is available.
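The adaptive (local) strategy above can be sketched in plain NumPy. This is a minimal illustration, not a library implementation: the function name `adaptive_mean_threshold`, the `block` and `offset` parameters, and the synthetic test image are all assumptions made for the example. Each pixel is compared with the mean of its surrounding window, so a brightness ramp across the image does not hide the dark strokes.

```python
import numpy as np

def adaptive_mean_threshold(gray, block=15, offset=5):
    """Binarize `gray` by comparing each pixel with its local mean minus `offset`.

    `block` (odd window size) and `offset` are illustrative parameters.
    """
    if block % 2 == 0:
        raise ValueError("block must be odd")
    pad = block // 2
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")

    # Integral image with a leading row/column of zeros, so any box sum
    # can be read off with four lookups.
    integral = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    integral[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)

    h, w = gray.shape
    box_sum = (integral[block:block + h, block:block + w]
               - integral[:h, block:block + w]
               - integral[block:block + h, :w]
               + integral[:h, :w])
    local_mean = box_sum / (block * block)

    # Pixels clearly darker than their neighborhood become 0, the rest 255.
    return np.where(gray > local_mean - offset, 255, 0).astype(np.uint8)

# Synthetic example: a left-to-right brightness ramp with two dark strokes.
ramp = np.tile(np.linspace(50, 200, 100), (60, 1))
ramp[:, 20:23] -= 40
ramp[:, 80:83] -= 40
gray = ramp.astype(np.uint8)

binary = adaptive_mean_threshold(gray, block=15, offset=5)
```

A caveat of this local-mean rule is that objects wider than the window look like background to their own interior, so `block` should be chosen larger than the structures of interest.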

import cv2

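# Load the image as grayscale (Python, OpenCV)

image = cv2.imread('image.jpg', cv2.IMREAD_GRAYSCALE)

# cv2.imread returns None instead of raising when the file cannot be read

if image is None:

    raise FileNotFoundError('image.jpg could not be read')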

# Apply Otsu's thresholding

_, thresholded = cv2.threshold(image, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)

cv2.imwrite('thresholded.jpg', thresholded)

% MATLAB equivalent: load the image

image = imread('image.jpg');

% Convert to grayscale only if the image has three channels

if size(image, 3) == 3

    grayImage = rgb2gray(image);

else

    grayImage = image;

end

% Apply Otsu's thresholding

level = graythresh(grayImage); % Compute Otsu's threshold (normalized 0-1)

thresholded = imbinarize(grayImage, level); % Apply threshold

% Convert logical to uint8 for saving (0 or 255)

thresholded = uint8(thresholded) * 255;

imwrite(thresholded, 'thresholded.jpg');
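To make the criterion behind Otsu's method concrete, the following sketch searches every candidate threshold and keeps the one with the smallest weighted within-class variance. The function name `otsu_threshold` and the synthetic bimodal data are assumptions for illustration. The value it returns may differ slightly from a library's result when several thresholds in the gap between the two modes score equally.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the threshold t that minimizes the within-class variance
    of the two groups {pixels <= t} and {pixels > t}.

    Expects an 8-bit (uint8) image.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256, dtype=np.float64)

    best_t, best_within = 0, np.inf
    for t in range(255):
        p0, p1 = prob[:t + 1], prob[t + 1:]
        w0, w1 = p0.sum(), p1.sum()
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so the split is meaningless
        mu0 = (levels[:t + 1] * p0).sum() / w0
        mu1 = (levels[t + 1:] * p1).sum() / w1
        var0 = (((levels[:t + 1] - mu0) ** 2) * p0).sum() / w0
        var1 = (((levels[t + 1:] - mu1) ** 2) * p1).sum() / w1
        within = w0 * var0 + w1 * var1
        if within < best_within:
            best_t, best_within = t, within
    return best_t

# Synthetic bimodal data: a dark population near 60 and a bright one near 180.
rng = np.random.default_rng(0)
samples = np.concatenate([rng.normal(60, 10, 5000), rng.normal(180, 10, 5000)])
gray = np.clip(samples, 0, 255).astype(np.uint8).reshape(100, 100)

t = otsu_threshold(gray)
binary = np.where(gray > t, 255, 0).astype(np.uint8)
```

The exhaustive loop is written for readability. Practical implementations usually compute the same quantity from cumulative sums of the histogram.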

2. Edge-Based Segmentation

Edge-based methods locate objects by identifying discontinuities or sharp intensity transitions in an image. Edges often mark the boundaries between distinct objects or regions.

- Sobel Operator: Computes intensity gradients in the horizontal and vertical directions. The resulting gradient magnitude highlights edges.

- Canny Edge Detector: A multi-stage algorithm that includes noise reduction, gradient calculation, non-maximum suppression, double-thresholding, and edge tracking by hysteresis. Considered one of the more robust classical edge detection methods.

# Canny edge detection (Python, OpenCV); 50 and 150 are the lower and upper hysteresis thresholds

edges = cv2.Canny(image, 50, 150)

cv2.imwrite('edges.jpg', edges)

% MATLAB equivalent: apply Canny edge detection (thresholds are given in the range 0-1)

edges = edge(grayImage, 'Canny', [50 150]/255);

% Convert logical result to uint8 (0 or 255) for saving

edges = uint8(edges) * 255;

imwrite(edges, 'edges.jpg');
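The Sobel operator described above can be sketched directly in NumPy. The helper names `filter3x3` and `sobel_magnitude` and the synthetic step image are assumptions for illustration. The sketch applies the two 3x3 kernels and combines the horizontal and vertical responses into a gradient magnitude.

```python
import numpy as np

# Horizontal-gradient kernel; its transpose responds to vertical gradients.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def filter3x3(gray, kernel):
    """Correlate `gray` with a 3x3 kernel, replicating border pixels."""
    padded = np.pad(gray.astype(np.float64), 1, mode="edge")
    h, w = gray.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def sobel_magnitude(gray):
    """Gradient magnitude from the horizontal and vertical Sobel responses."""
    gx = filter3x3(gray, SOBEL_X)
    gy = filter3x3(gray, SOBEL_Y)
    return np.hypot(gx, gy)

# Synthetic example: a vertical step edge between columns 9 and 10.
step = np.zeros((20, 20), dtype=np.uint8)
step[:, 10:] = 100

mag = sobel_magnitude(step)
```

Only the two columns adjacent to the step respond; flat areas give zero magnitude. Thresholding `mag` turns the response into a binary edge map.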

3. Region-Based Segmentation

Region-based methods group neighboring pixels into regions based on similarity criteria (e.g., intensity, texture, color). Unlike edge-based approaches, which focus on boundaries, region-based segmentation attempts to determine the homogeneous areas directly.

- Region Growing: Starts from one or more “seed” pixels and expands outward as long as neighboring pixels fulfill a similarity condition (like intensity difference). This simple approach can be effective if seeds and criteria are well chosen.

- Watershed Algorithm: Conceptualizes the grayscale image as a topographic surface. Regions “flood” from the minima, and barriers (watershed lines) form where floods from different minima meet. Particularly effective in scenarios where clear intensity ridges separate distinct objects.
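Region growing as described above can be sketched with a breadth-first search. The function name `region_grow`, the `tolerance` parameter, the choice of 4-connectivity, and the rule of comparing against the seed's intensity (rather than a running region mean) are all assumptions made for this example.

```python
from collections import deque

import numpy as np

def region_grow(gray, seed, tolerance=10):
    """Grow a 4-connected region from `seed`, given as (row, col).

    A neighbor joins the region when its intensity differs from the
    seed's intensity by at most `tolerance`.
    """
    h, w = gray.shape
    seed_value = float(gray[seed])
    mask = np.zeros((h, w), dtype=bool)
    mask[seed] = True
    queue = deque([seed])

    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < h and 0 <= nc < w and not mask[nr, nc]:
                if abs(float(gray[nr, nc]) - seed_value) <= tolerance:
                    mask[nr, nc] = True
                    queue.append((nr, nc))
    return mask

# Synthetic example: two bright squares on a darker background.
image = np.full((20, 20), 50, dtype=np.uint8)
image[5:12, 5:12] = 200    # 7x7 square containing the seed
image[15:18, 15:18] = 200  # similar intensity, but not connected to the seed

mask = region_grow(image, seed=(8, 8), tolerance=10)
```

The second square has the same intensity but is never reached, which shows that the result depends on connectivity to the seed as well as on the similarity criterion.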

# Watershed preprocessing (simplified illustration: Otsu thresholding only)

import cv2

# Load the image in color; cv2.imread returns BGR channel order by default

image = cv2.imread('image.jpg')

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Inverted Otsu threshold as a rough foreground estimate

_, binary = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

# Save the binary mask; a full watershed also needs markers and a call to cv2.watershed

cv2.imwrite('watershed_result.jpg', binary)

% MATLAB equivalent: apply Otsu's thresholding with inverse binary mode

level = graythresh(grayImage);

binary = imbinarize(grayImage, level);

binary = ~binary; % invert binary (like THRESH_BINARY_INV)

% Save result

binary_uint8 = uint8(binary) * 255;

imwrite(binary_uint8, 'watershed_result.jpg');

4. Clustering-Based Segmentation

Clustering-based segmentation is an unsupervised learning approach, commonly used when the number of classes or segments is known in advance (or we wish to explore multiple possible segmentations). It groups pixels into clusters based on their feature vectors (e.g., intensity, color, texture).

- K-Means Clustering: Partitions data into \(k\) clusters by minimizing the within-cluster sum of squares. Often used for simple color-based segmentation or to separate an image into regions of similar intensity.

- Gaussian Mixture Models (GMM): Models the data distribution with multiple Gaussian components. Each pixel is assigned to the component (cluster) that maximizes its posterior probability.

from sklearn.cluster import KMeans

import numpy as np

# Assume 'image' is a 2D grayscale array

pixels = image.reshape(-1, 1) # Flatten to (N,1)

# K-Means with 3 clusters

kmeans = KMeans(n_clusters=3, n_init=10, random_state=42).fit(pixels) # explicit n_init keeps behavior consistent across scikit-learn versions

labels = kmeans.labels_.reshape(image.shape)

# 'labels' now contains values 0,1,2 representing the cluster each pixel belongs to

% MATLAB equivalent: flatten the grayscale image into a column vector

pixels = double(grayImage(:));

% Apply K-Means clustering with 3 clusters

n_clusters = 3;

[idx, ~] = kmeans(pixels, n_clusters);

% Reshape the cluster labels back to the original image dimensions

segmented_image = reshape(idx, size(grayImage));
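To show what "minimizing the within-cluster sum of squares" involves, the sketch below implements the basic alternating procedure (often called Lloyd's algorithm) on pixel intensities with NumPy only. The function name `kmeans_1d`, the evenly spaced initial centers, and the synthetic three-band image are assumptions for illustration. Library implementations use more careful initialization.

```python
import numpy as np

def kmeans_1d(values, k=3, iterations=50):
    """Cluster scalar intensities by alternating assignment and mean update."""
    values = np.asarray(values, dtype=np.float64).ravel()
    # Deterministic start: spread the centers evenly over the intensity range.
    centers = np.linspace(values.min(), values.max(), k)

    for _ in range(iterations):
        # Assignment step: each value goes to its nearest center.
        labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
        # Update step: each center moves to the mean of its members.
        updated = np.array([
            values[labels == j].mean() if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(updated, centers):
            break
        centers = updated

    # Final assignment against the converged centers.
    labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
    return labels, centers

# Synthetic example: three vertical bands with mild Gaussian noise.
rng = np.random.default_rng(0)
image = np.empty((30, 30))
image[:, :10] = 30
image[:, 10:20] = 120
image[:, 20:] = 220
image += rng.normal(0, 5, image.shape)

labels, centers = kmeans_1d(image, k=3)
label_image = labels.reshape(image.shape)
```

Because the centers start in increasing order and the bands are well separated, cluster 0 corresponds to the darkest band here. In general the numbering of clusters is arbitrary.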

5. Deep Learning-Based Segmentation

With the advancement of deep neural networks, deep learning-based methods have dramatically improved segmentation accuracy and robustness, often surpassing traditional approaches in challenging, real-world scenarios.

- U-Net: Originally designed for biomedical image segmentation. Its symmetrical encoder-decoder architecture, with skip connections between matching encoder and decoder levels, captures both context and precise localization.

- Mask R-CNN: An extension of Faster R-CNN that performs instance segmentation. It detects objects and predicts a binary mask for each instance, and is widely used in applications like autonomous driving and robotics.

- Fully Convolutional Networks (FCN): Replace the fully connected layers in standard CNNs with convolutional ones, enabling end-to-end pixel-wise classification.

Although these methods typically require large labeled datasets and significant computational resources (often GPUs), they are currently the state-of-the-art for many complex segmentation tasks.
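The idea behind fully convolutional networks, replacing a fully connected classifier with a convolution, can be illustrated without a deep learning framework. In this sketch the feature map, weights, and bias are random placeholders (assumptions, not a trained model). It shows that applying one dense layer's weights at every spatial position, which is what a 1x1 convolution does, yields a class score for each pixel.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical feature map from earlier layers: (channels, height, width).
features = rng.normal(size=(8, 4, 5))

# Hypothetical classifier parameters: 3 classes, 8 input channels.
weights = rng.normal(size=(3, 8))
bias = rng.normal(size=3)

# A fully connected layer applied to a single pixel's feature vector.
pixel_scores = weights @ features[:, 2, 3] + bias

# The same weights used as a 1x1 convolution over the whole feature map.
score_map = np.einsum("kc,chw->khw", weights, features) + bias[:, None, None]

# Pixel-wise classification: pick the highest-scoring class at each position.
label_map = score_map.argmax(axis=0)
```

Here `score_map[:, 2, 3]` matches `pixel_scores`, so the convolutional form computes the dense layer at every position in one pass. Real networks add learned feature extraction and upsampling around this step.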

Applications of Image Segmentation

Segmentation is essential across a multitude of domains:

- Medical Imaging: Automatic detection and delineation of tumors, organs, or lesions in modalities such as MRI, CT, and ultrasound.

- Autonomous Vehicles: Lane detection, pedestrian recognition, and obstacle avoidance rely on consistent segmentation in real time.

- Satellite Imaging: Land cover classification, vegetation mapping, and urban development monitoring leverage segmentation of large-scale remote-sensing imagery.

- Security Systems: Face, fingerprint, or iris segmentation is critical for biometric authentication and identification.

Challenges and Considerations

Despite the variety of techniques, segmentation remains a challenging field:

- Varying Illumination and Contrast: Changes in lighting can significantly affect pixel intensities, complicating thresholding and region-based approaches.

- Complex Shapes and Textures: Real-world objects often lack uniform color or intensity; advanced methods or deep learning may be required to segment them accurately.

- High Computational Cost: Processing large or high-resolution images can be expensive, especially with sophisticated algorithms (e.g., watershed) or 3D volumetric data.

- Choosing the Right Method: No single technique is universally best. The choice depends on the nature of the images, noise levels, domain requirements, and computational constraints.

Further Learning Resources

For those interested in diving deeper into image segmentation, the following resources offer tutorials, libraries, and theoretical foundations:

- OpenCV - Computer vision library – Provides ready-to-use segmentation functions.

- scikit-image - Image processing in Python – Includes thresholding, region growing, and more.

- Coursera - Digital Image Processing – In-depth online courses covering traditional and modern segmentation methods.

- Image Segmentation (Wikipedia) – A high-level overview and links to research papers.

- Digital Image Processing by Gonzalez & Woods – A classic textbook containing fundamental segmentation algorithms and theory.
